What are the ethical issues?

Overview

When we seek to improve our teaching and learning practices through Assessment, Evaluation or Learning Analytics, we always need to be aware of the ethical issues inherent in each area. This chapter will cover some major ethical considerations for each.

Why is this important?

When we use learning technologies to support and engage our students, we will inevitably need to use the same or other technologies to assess, evaluate and support students in different ways. With the growing use of technology alongside more traditional practices in educational settings, it's important that we consider the ethical issues these technologies and approaches may present, both to protect our students and to ensure our practices are effective in doing what we came here to do: support our students in their learning process.

Guiding Questions

As you’re reading through these materials, please consider the following questions, and take notes to ensure you understand their answers as you go.

- What’s your least favourite type of assessment to complete? Why?

- How do you know different assessment strategies are actually effective in measuring a student’s learning?

- Have you encountered any difficulties or challenges in using technology for assessment purposes as a student? Did they have anything to do with who you are?

- When engaging in research to evaluate the effectiveness of a series of lessons or learning materials, what ethical issues might come up?

- What data do you think is gathered by your institution's LMS/VLE? Did you know about it?

Key Readings

Lang, Charles; Macfadyen, Leah; Slade, Sharon; Prinsloo, Paul and Sclater, Niall (2018). The Complexities of Developing a Personal Code of Ethics for Learning Analytics Practitioners: Implications for Institutions and the Field. In: Proceedings of the 8th International Conference on Learning Analytics and Knowledge, ACM, New York, pp. 436–440.

Morris, M. (2003). Ethical considerations in evaluation. In International handbook of educational evaluation (pp. 303–327). Springer, Dordrecht.

Rubel, A., & Jones, K. M. (2016). Student privacy in learning analytics: An information ethics perspective. The Information Society, 32(2), 143–159.

Assessment

As mentioned in previous chapters, assessment is a challenging practice to engage in, both for instructors and for learners. With the relatively recent developments in standards-based and/or criterion-based assessment strategies and ‘grading to a curve’, the original etymological notion of ‘sitting beside’ a learner may be difficult to implement in practice when everything has to be measured. While this section heavily emphasises the ethical issues with standardised testing, we should always be mindful of how these test-based ethical issues may apply to other strategies we use.

The videos below provide some context on how standardised tests work; in the second video, Dr. Ibram X. Kendi explains why many standardised tests were originally created.

There are a number of different ways students can interpret assessment, especially the use of rubrics and numerical labels for their learning. Generally, either they start from 100% and ‘make mistakes’ or have ‘points deducted’, meaning they must have done something wrong (creating a self-deficit mindset), or they start from 0% and build up through their work to their achieved score (with the idea that their work builds towards something and is not in any way ‘bad’). These two different perceptions of a score on a test or assignment have everything to do with how criteria, standards and rubrics are framed and presented to students.
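To illustrate that the difference lies in framing rather than arithmetic, here is a minimal Python sketch that scores the same piece of work both ways. The rubric criteria, point values and function names are hypothetical, invented for this example; the point is that both approaches arrive at the same number, while the feedback a student reads differs.

```python
# A hypothetical rubric: criterion -> (points available, points achieved).
RUBRIC = {
    "argument": (40, 32),
    "evidence": (30, 24),
    "structure": (20, 17),
    "style": (10, 9),
}


def deductive_score(rubric):
    """Start from 100% and subtract the points 'lost' on each criterion."""
    available = sum(avail for avail, _ in rubric.values())
    lost = sum(avail - got for avail, got in rubric.values())
    return 100 * (available - lost) / available


def additive_score(rubric):
    """Start from 0% and add up the points earned on each criterion."""
    available = sum(avail for avail, _ in rubric.values())
    earned = sum(got for _, got in rubric.values())
    return 100 * earned / available


def feedback_lines(rubric, framing):
    """Describe each criterion either as a deduction or as an achievement."""
    lines = []
    for criterion, (avail, got) in rubric.items():
        if framing == "deductive":
            lines.append(f"{criterion}: -{avail - got} points")
        else:
            lines.append(f"{criterion}: +{got} of {avail} points")
    return lines


if __name__ == "__main__":
    print(deductive_score(RUBRIC), additive_score(RUBRIC))  # 82.0 82.0
    for framing in ("deductive", "additive"):
        print(framing, feedback_lines(RUBRIC, framing))
```

Both functions return 82.0 for this rubric; only the wording of the feedback ("-8 points" versus "+32 of 40 points") changes what the student is invited to focus on.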

Assigning a number to students inevitably leads to self-judgements, comparisons with others and the labelling of students with a subjective value judgement. While this is the system that many students and teachers currently operate in, its ethical implications deserve attention, both in terms of how students perceive themselves and in terms of how effective this approach actually is for assessing learning. A Google Scholar search will turn up many ethical issues related to assessment, including biases based on race, gender and ability. As the video above mentioned, assessments such as standardised tests may not account for cultural knowledge or ways of knowing and being, and may therefore provide inaccurate results when attempting to measure student learning.

Wellbeing and Assessment

An interesting area of research that has been around for over 60 years explores test anxiety: the feelings that arise in students from the pressure to perform well in exams. This phenomenon is so prevalent that Spielberger (2010) developed an inventory to characterise it. There is also a substantial body of research showing that anxiety impedes cognition, and even research on what students can do to alleviate the problem (Mavilidi, Hoogerheide, & Paas, 2014). While it's true that tests are not going anywhere, this creates an ethical consideration for educators when planning assessment strategies. Do we use a strategy that we know may cause our students anxiety and negatively affect their performance, thus inaccurately measuring their learning, or do we choose a different path?

While these considerations apply to assessment in general, another layer is added when we use computers and technology for assessment. As Schulenberg and Yutrzenka (2004) outline, students' proficiency with and attitudes towards technology, the interpretation of reports by those administering assessments, and the equivalence between paper-based and technology-based assessment are all potential ways in which the validity and reliability of computer-based assessment can be affected. While this study is now over 15 years old, the increase in technology-based assessment means that we need to pay more attention to how these issues, and others, may affect assessment.

Other options for assessment include strategies drawing on Universal Design for Learning (UDL), gamification and open assignments, which provide opportunities for engaging, fun and creative means of measuring learning. For more on these topics, see the chapter What are the Emerging Trends in Pedagogy?

Distrust and Automated Racial Bias

Many of the ethical issues surrounding assessment and its relationship with technology have to do with using technology to monitor students' behaviour during assessments.

Many concerns have been raised, especially since the COVID-19 pandemic in 2020, that online proctoring and invigilation tools present too many problems to justify their use; most of these tools were adopted in response to concerns about exam cheating. Given that exams and high-stakes tests are a major cause of anxiety for many students, resulting in poorer performance, it is perhaps understandable why some students feel the need to take a shortcut.

In the video below, you can see how these proctoring tools may collect learning analytics (LA) data on students through their video feed. This raises obvious concerns, not only about the ethics of collecting this data and a student's inability to opt out, but also about privacy, with teachers essentially entering their students' homes without the students having any choice in the matter.

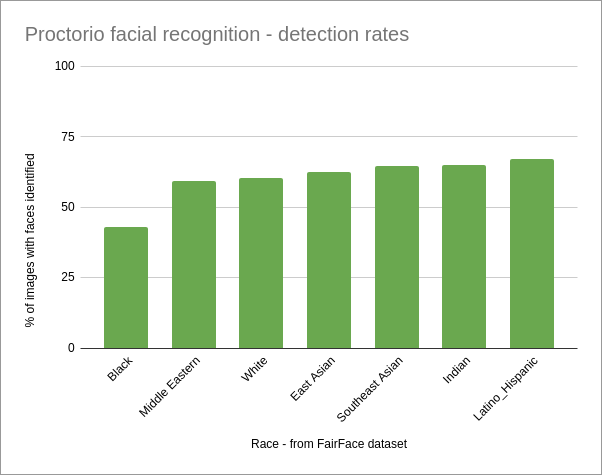

Another issue of concern is that many of the algorithms used in these tools have been found to be racially biased in favour of lighter-skinned students, failing to detect students with darker skin (Clark, 2021). In a particularly troubling case, some candidates taking the bar exam to become lawyers in the United States were unable to access their tests because of their skin colour. This had the potential to perpetuate inequality, given that 85% of lawyers in the US at the time were Caucasian.

Graph: Akash Satheesan, ProctorNinja

While there are strategies and workarounds for these issues, the burden of the ‘fix’ is usually placed on the student, not on the instructor or the technology company providing these tools.

Being mindful, then, of the technologies we choose for our assessment strategies and their potential effects on our students is an important consideration in ensuring the ethical use of technology for assessment.

Evaluation

As discussed in previous chapters, engaging in evaluation is much like conducting research, so many of the principles around the ethical treatment of participants (those taking part in the research) also apply. If we conduct interviews, focus groups or even online surveys, we need to make sure that participants are not harmed in any way by a process intended to further our own understanding.

Simons (2006) outlines several example scenarios that provide insight into the ethical issues that may arise in educational evaluation. These are less tied to the protection of research participants and more about power dynamics and attempts to coerce others into action. Additionally, Newman and Brown (1996) asked different parties how frequently they perceived ethical violations of the program evaluation standards (see also Yarbrough et al., 2010); their findings are outlined in the table below.

| Reported by evaluators | Reported by program administrators | Reported by program staff |
|---|---|---|
| Evaluator selects a test primarily because of his or her familiarity with it | Evaluator selects a test primarily because of his or her familiarity with it | Evaluator selects a test primarily because of his or her familiarity with it |
| Evaluation responds to the concerns of one interest group more than another | Evaluation responds to the concerns of one interest group more than another | Evaluation responds to the concerns of one interest group more than another |
| Evaluator loses interest in the evaluation when the final report is delivered | Evaluation is conducted because it is “required” when it obviously cannot yield useful results | Evaluation is conducted because it is “required” when it obviously cannot yield useful results |
| Evaluator fails to find out what the values are of right-to-know audiences | Limitations of the evaluation are not described in the report | Evaluation is so general that it does not address differing audience needs |
| Evaluator writes a highly technical report for a technically unsophisticated audience | Evaluator conducts an evaluation when he or she lacks sufficient skills and experience | Evaluation reflects the self-interest of the evaluator |

(Newman & Brown, 1996)

What we can derive from these two sources (and their associated references) is that issues such as who the evaluation is actually for, what useful results it can yield and the focus of the evaluation can all be ethical issues that stand in the way of a successful evaluation project. Considering all the factors involved in an evaluation project, including access to findings, interested parties and beneficiaries of the findings, and ensuring that the focus of the evaluation is chosen carefully from the outset, are all important issues; these issues, again, link to the questions we ask.

Learning Analytics

In terms of LA, the ethics of this practice are inextricably linked to student privacy. As the use of online learning platforms grows, the data these systems gather on individuals, as well as aggregate data combining many individuals' data, raise many questions for student privacy. As we all adapt to a world in which our own actions online are a sellable commodity among private companies, the practice of recording interactions and behaviours in an educational setting comes with governmental and institutional oversight embedded.

As Rubel and Jones (2016) discuss, questions around who the data is for, who should have access, what is collected, how it is collected, the broader value of the practice and student agency are all important issues to consider. They outline four recommendations (p. 156):

- Learning analytics systems should provide controls for differential access to private student data;

- institutions must be able to justify their data collection using specific criteria—relevance is not enough;

- the actual or perceived positive consequences of learning analytics may not be equally beneficial for all students, and the cost, then, of invading one student’s privacy may be more or less harmful, and we need a full accounting of how benefits are distributed between institutions and students, and among students; and finally,

- in spite of legal guidelines that do not require institutions to extend students’ control of their own privacy, they should be made aware of collection and use of their data and permitted reasonable choices regarding collection and use of that data.
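The first recommendation, controls for differential access to private student data, can be pictured as a role-based filter applied before anyone sees a student record. The Python sketch below is only an illustration under stated assumptions: the roles, record fields and policy are hypothetical, and in practice such rules would come from institutional data governance policies rather than a hard-coded dictionary.

```python
from dataclasses import asdict, dataclass


# Hypothetical student record held by a learning analytics system.
@dataclass(frozen=True)
class StudentRecord:
    student_id: str
    name: str
    logins_per_week: int
    quiz_average: float
    accessibility_needs: str


# Hypothetical access policy: the fields each role is permitted to see.
ACCESS_POLICY = {
    "instructor": {"student_id", "name", "logins_per_week", "quiz_average"},
    "advisor": {"student_id", "name", "quiz_average", "accessibility_needs"},
    "researcher": {"logins_per_week", "quiz_average"},  # no identifying fields
}


def view_for(role, record):
    """Return only the fields of `record` that `role` may access.

    Roles not listed in the policy receive an empty view (deny by default).
    """
    allowed = ACCESS_POLICY.get(role, set())
    return {field: value for field, value in asdict(record).items() if field in allowed}


if __name__ == "__main__":
    record = StudentRecord("s001", "Ana", 5, 78.5, "extended time")
    print(view_for("researcher", record))  # {'logins_per_week': 5, 'quiz_average': 78.5}
    print(view_for("visitor", record))     # {}
```

Denying access by default to unlisted roles is one design choice consistent with the recommendation. Note that a filter like this addresses only access; it does nothing about the other recommendations on justifying collection and informing students.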

Much like other discussions of assessment and evaluation, there are many levels within an organisation that must address ethical and student privacy concerns. One way individuals can confront this issue is to work within a personal code of ethics with regard to the handling of, and action upon, learning analytics (Lang et al., 2018). At higher levels, policies and practices around access, usage and actions based on educational data should be established in line with Data Governance practices. Several authors have already put forward models for, and posed questions around, Data Governance for LA that highlight different parties' motivations, ownership of data, level of access and scope of data collection (Graf et al., 2012; Elouazizi, 2014).

As Machine Learning and AI begin to be leveraged in educational settings, the concepts around ethics and privacy in LA practices may require even more consideration and oversight.

Key Take-Aways

- Assessment strategies as well as the technologies we may choose to assess our students can raise ethical questions, so it’s important to consider these aspects carefully.

- Evaluation, much like different forms of research, can have embedded ethical issues in practice, related to power dynamics, vested interests and understanding of the problem being solved.

- Learning Analytics and the collection of data on individuals and groups of students is a much-discussed issue in the area, given the parallels with the use of ‘big data’ in the private sector.

Revisit Guiding Questions

When you think about your own work, or your experiences as a student, what have you read about in this chapter that makes you reconsider the experiences you’ve had? As an educator, what would you do differently, and how would you explain the ethics of these issues to a colleague or classmate?

Conclusion / Next Steps

How we deal with the ethics of assessment, evaluation and learning analytics will be different for every reader. In the next and final chapter, we’ll explore the evolving relationship between Learning Analytics and Learning (Experience) Design, and what that means for teaching and learning practice.

References

Clark, M. (2021). Students of color are getting flagged to their teachers because testing software can’t see them. The Verge. Retrieved August 23, 2021, from https://www.theverge.com/2021/4/8/22374386/proctorio-racial-bias-issues-opencv-facial-detection-schools-tests-remote-learning

Elouazizi, N. (2014). Critical factors in data governance for learning analytics. Journal of Learning Analytics, 1(3), 211-222.

Graf, S., Ives, C., Lockyer, L., Hobson, P., & Clow, D. (2012, April). Building a data governance model for learning analytics. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge (pp. 21-22).

Mavilidi, M. F., Hoogerheide, V., & Paas, F. (2014). A quick and easy strategy to reduce test anxiety and enhance test performance. Applied Cognitive Psychology, 28(5), 720–726.

Newman, D. L., & Brown, R. D. (1996). Applied ethics for program evaluation. Thousand Oaks, CA: Sage.

Schulenberg, S. E., & Yutrzenka, B. A. (2004). Ethical issues in the use of computerized assessment. Computers in Human Behavior, 20(4), 477-490.

Simons, H. (2006). Ethics in evaluation. In I. F. Shaw, J. C. Greene, & M. M. Mark (Eds.), The SAGE handbook of evaluation (pp. 213–232). London, UK: SAGE Publications.

Spielberger, C. D. (2010). Test anxiety inventory. The Corsini encyclopedia of psychology, 1-1.

Further Reading

Stommel, J. (2018). How to ungrade. Retrieved August 23, 2021, from https://www.jessestommel.com/how-to-ungrade/

Yarbrough, D. B., Shulha, L. M., Hopson, R. K., & Caruthers, F. A. (2010). The program evaluation standards: A guide for evaluators and evaluation users. Sage Publications.
