What are the emerging trends in Learning Technology?

Overview

This chapter reviews trends in emerging learning technologies and their impact on teaching and learning. While the list presented here is not exhaustive, many of these technologies are on the minds of educators in various sectors, so you’re encouraged to learn more on your own about how these, and other, technologies may be affecting your own workplace or learning context.

Why is this important?

Understanding trends in educational and learning technologies, even if you don’t have close exposure to them in your work or study, is important because it lets us think beyond what we currently have access to. Many educators use the ‘toolbox’ metaphor: understanding the range of ‘tools’ that exist in different educational settings allows us to innovate in our teaching practice, so that we can prepare our students to engage with the world as it exists today.

Guiding Questions

As you’re reading through these materials, please consider the following questions, and take notes to ensure you understand their answers as you go.

- What technologies do you consider ‘emerging’ in your workplace or learning context?

- How feasible would it be to implement the technologies you’re reading about?

- What are the physical and psychological effects of engaging with these technologies?

Key Readings

Ferguson, R. (2012). Learning analytics: drivers, developments and challenges. International Journal of Technology Enhanced Learning, 4(5/6), 304–317.

Goksel, N., & Bozkurt, A. (2019). Artificial intelligence in education: Current insights and future perspectives. In Handbook of Research on Learning in the Age of Transhumanism (pp. 224–236). IGI Global.

Kavanagh, S., Luxton-Reilly, A., Wuensche, B., & Plimmer, B. (2017). A systematic review of Virtual Reality in education. Themes in Science and Technology Education, 10(2), 85–119.

Teaching Machines

It’s striking to realise that learning technologies are much older than we might think. While digital learning technologies are at the forefront of how we teach and learn in the 21st century, ‘Teaching Machines’ (early adaptive / personalised learning devices) and other such tools have been around for more than 75 years. Check out BF Skinner (see Behaviourism) talking about the advantages of his teaching machines, and notice how what he was trying to accomplish parallels the tools of today.

Audrey Watters, a prominent educational technologist and the mind behind HackEducation, wrote a book called Teaching Machines: The History of Personalized Learning, all about early edtech, which is worth a look.

Learning Analytics (LA)

According to SoLAR (The Society for Learning Analytics Research), “Learning Analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimising learning and the environments in which it occurs”.

This is a fairly broad definition, so learning analytics can be used to understand everything from how an individual student logs in to a system, including the location and time of these logins, all the way to trends in enrolment for thousands of students through a particular degree.

LA overlaps with many other fields, including Data Science, Data Visualisation, Statistics, and AI and ML (see below), but the main goal of the field is to better understand learning experiences and to use those insights to improve them for students. This can be done in many ways, but in a nutshell there are different types of analytics we can use, two of which are:

- Descriptive Analytics tell us what is happening: they describe phenomena that are occurring during the learning process (e.g., how many times a student logs in, or trends in quiz results)

- Predictive Analytics use data to try to predict things about learners so that we can intervene and improve learning (e.g., trends in log-ins and quiz responses early in a class may tell us a student is on track to fail the class)
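To make the distinction a little more concrete, here is a minimal Python sketch. It assumes a hypothetical LMS export in which each record is a (student, event, value) tuple; the student names, the two-login minimum and the 50% pass mark are all invented for illustration. The descriptive part simply counts and averages. The ‘predictive’ part is a deliberately crude rule standing in for the statistical or ML models that real predictive systems train on historical outcomes.

```python
from collections import defaultdict

# Hypothetical LMS export: (student, event type, value).
# For "quiz" events the value is a score out of 100; logins carry no value.
events = [
    ("ana", "login", None), ("ana", "login", None), ("ana", "quiz", 85),
    ("ana", "login", None), ("ben", "login", None), ("ben", "quiz", 40),
    ("ben", "quiz", 35), ("cai", "login", None), ("cai", "login", None),
    ("cai", "quiz", 70),
]

def describe(events):
    """Descriptive analytics: login counts and mean quiz score per student."""
    logins = defaultdict(int)
    scores = defaultdict(list)
    for student, kind, value in events:
        if kind == "login":
            logins[student] += 1
        elif kind == "quiz":
            scores[student].append(value)
    students = sorted(set(logins) | set(scores))
    return {
        s: {
            "logins": logins[s],
            "mean_quiz": sum(scores[s]) / len(scores[s]) if scores[s] else None,
        }
        for s in students
    }

def flag_at_risk(summary, min_logins=2, pass_mark=50):
    """A crude stand-in for predictive analytics (hypothetical thresholds):
    flag students with few logins or a low average quiz score."""
    flagged = []
    for student, stats in summary.items():
        low_engagement = stats["logins"] < min_logins
        low_scores = stats["mean_quiz"] is not None and stats["mean_quiz"] < pass_mark
        if low_engagement or low_scores:
            flagged.append(student)
    return flagged

summary = describe(events)
print(summary["ben"])         # {'logins': 1, 'mean_quiz': 37.5}
print(flag_at_risk(summary))  # ['ben']
```

A real predictive model would learn which patterns actually preceded failure in past cohorts, rather than relying on thresholds someone typed in by hand.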

In 2013, Lockyer, Heathcote and Dawson outlined two further categories of learning analytics to help researchers and educators understand which types of data could be used for which purposes:

- Checkpoint Analytics – Data points that inform the educator of the ‘status’ of students, such as when they logged in, how often they do so, etc. These types of analytics can then lead to pedagogical interventions such as reminders or further encouragement to engage.

- Process Analytics – Data points that describe the process of learning, through engagement with resources or even other learners. This data can show educators the process learners go through to complete a task: the HOW of their learning. From this data, educators can more fully understand what students are doing to complete an assignment or project, which can lead to different interventions to support this process, or even a redesign of the assignment or project.
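The difference between these two categories can be sketched in a few lines of Python. Both functions below work on a hypothetical activity log for a single assignment; the log format, resource names, dates and the seven-day reminder window are assumptions for illustration, not part of Lockyer, Heathcote and Dawson’s framework. The checkpoint function answers a ‘status’ question (who hasn’t been active recently?), while the process function reconstructs the order in which each student used resources.

```python
from datetime import date

# Hypothetical activity log for one assignment: (student, day, resource used).
log = [
    ("ana", date(2024, 3, 1), "brief"),
    ("ana", date(2024, 3, 2), "forum"),
    ("ana", date(2024, 3, 4), "draft_upload"),
    ("ben", date(2024, 3, 1), "brief"),
    ("ben", date(2024, 3, 9), "draft_upload"),
    ("cai", date(2024, 2, 20), "brief"),
]

def checkpoint_reminders(log, today, max_gap_days=7):
    """Checkpoint analytics: which students have been inactive for more
    than max_gap_days (a hypothetical threshold) and may need a reminder?"""
    last_seen = {}
    for student, day, _ in log:
        last_seen[student] = max(day, last_seen.get(student, day))
    return sorted(s for s, day in last_seen.items()
                  if (today - day).days > max_gap_days)

def learning_paths(log):
    """Process analytics: the sequence of resources each student used, in time order."""
    paths = {}
    for student, day, resource in sorted(log, key=lambda entry: (entry[0], entry[1])):
        paths.setdefault(student, []).append(resource)
    return paths

print(checkpoint_reminders(log, today=date(2024, 3, 10)))  # ['cai']
print(learning_paths(log)["ben"])                          # ['brief', 'draft_upload']
```

The checkpoint result might prompt a reminder to cai, while the process result shows that ben went straight from the brief to uploading a draft without visiting the forum, which is the kind of ‘how’ insight that could inform a redesign of the task.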

MMLA

One question to think about is ‘Where does all this data come from?’ The easy answer is that it comes from the systems that collect it, namely learning platforms like an LMS: simply through the continued use of such systems, educational data is collected and LA work can be done. But given that learning is an experience and process that usually takes place outside of these systems, how can we say that LA actually informs teaching and learning practice?

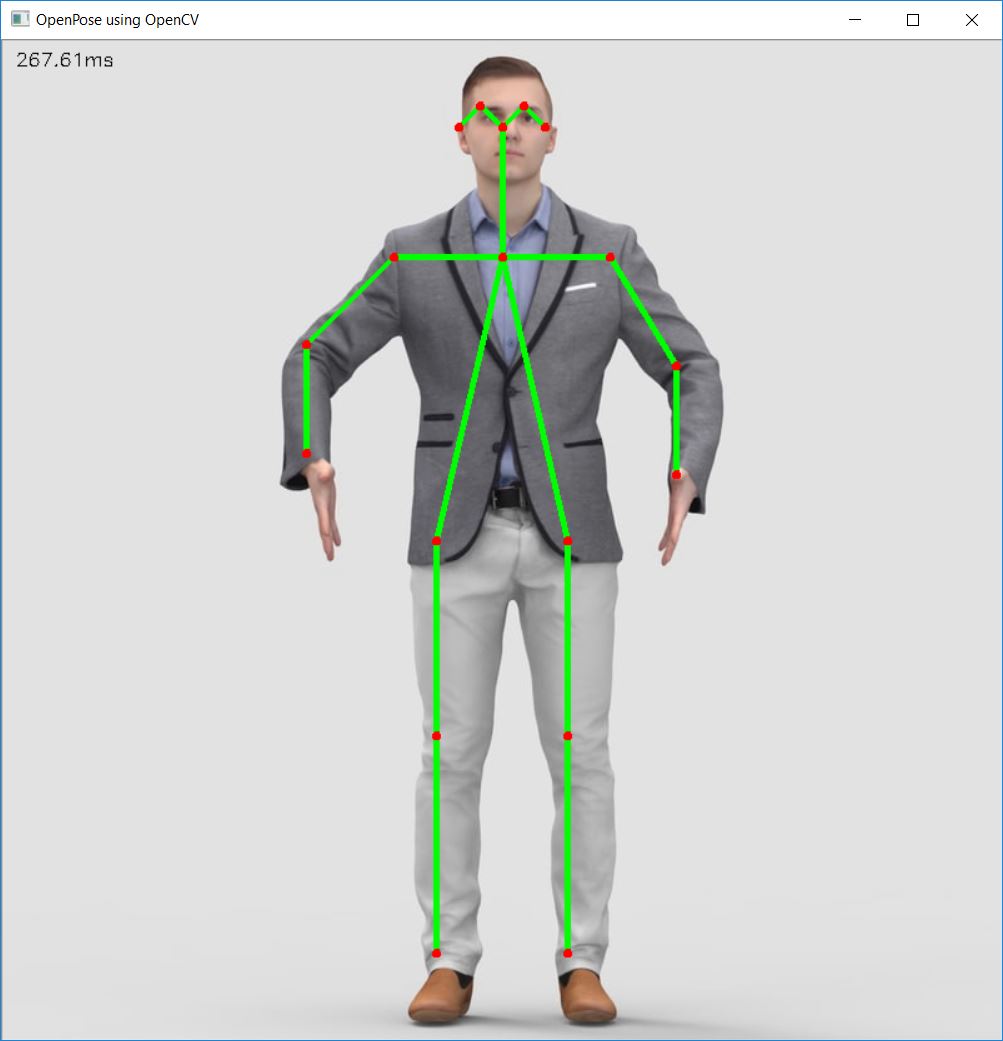

There is an area of research within LA called Multimodal Learning Analytics (MMLA) that tackles exactly this problem: it explores how people learn in the real world by collecting multiple types of data (the Multimodal part), including LMS data and various physiological data. The Transformative Learning Technologies Lab (TLTL) at Northwestern University in the US does great work in this area, using simple webcams, Microsoft Kinect devices, eye trackers (which can also measure pupil dilation) and wrist-worn sensors to gather data on students learning in the classroom, including their individual data and how they interact in a group setting. By exploring group dynamics and individual physiological measures, researchers can gain a better understanding of what learning is and how it works in different situations, and their findings can inform how to teach in offline (classroom) environments.

LA Visualisations

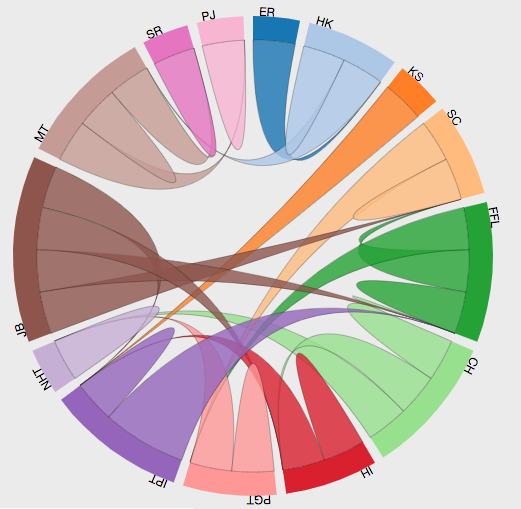

Much of the data we get from the systems that collect it comes in the form of raw data, such as a huge text file or a giant spreadsheet, so the data needs to be processed and sometimes visualised to help people understand it. A simple Google image search brings up many different ways to visualise this data, which raises a common concern within the field: how do different people interpret this data? As Corrin (2021) points out in her blog post, when Learning Analytics is used in different educational settings, we cannot assume that everyone knows how to act upon this data appropriately; they need to be trained on what it means and what to do about it.

This is where LA overlaps with a field called Data Visualisation, which focuses on creating graphical representations of raw data that are meaningful to those looking at them. This field is really important because it helps us understand how to present information in an ‘easy to digest’ manner, and tries to ensure that the same meaning is derived from a graph or chart no matter who is looking at it.
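As a small illustration of the journey from raw data to something easier to digest, the Python sketch below aggregates a hypothetical raw export of login dates into weekly totals and then prints a plain-text bar chart. The data and the one-symbol-per-login scale are assumptions; in practice you would more likely use a charting library, but the two steps (aggregate the raw rows, then encode the counts visually) are the same.

```python
from collections import Counter
from datetime import date

# Hypothetical raw export: one row per login, as an ISO date string.
raw_logins = [
    "2024-03-04", "2024-03-05", "2024-03-05", "2024-03-07",
    "2024-03-11", "2024-03-18", "2024-03-19", "2024-03-20",
    "2024-03-20", "2024-03-21", "2024-03-22",
]

def weekly_counts(rows):
    """Aggregate individual login rows into counts per ISO week number."""
    weeks = Counter(date.fromisoformat(r).isocalendar()[1] for r in rows)
    return dict(sorted(weeks.items()))

def text_bar_chart(counts, symbol="#"):
    """Render each week's count as a horizontal bar, one symbol per login."""
    return "\n".join(f"Week {week:>2} | {symbol * n} {n}" for week, n in counts.items())

print(text_bar_chart(weekly_counts(raw_logins)))
# Week 10 | #### 4
# Week 11 | # 1
# Week 12 | ###### 6
```

Even in this tiny example, design choices (weekly rather than daily bins, bar length proportional to count) shape what a reader will conclude, which is exactly the concern Data Visualisation addresses.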

Artificial Intelligence (AI) & Machine Learning (ML)

When we consider all the educational data that researchers and teachers have access to, there is simply too much to pore through manually, so this is where computers can help. Artificial Intelligence and Machine Learning provide tools that pull meaningful insights from such data, using advanced algorithms to make meaning automatically where a human would previously have had to.

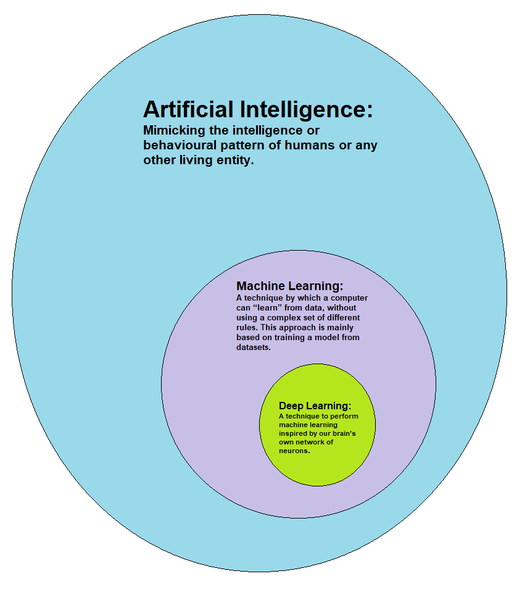

If you’re unfamiliar with the concepts of AI and ML, they are fairly simple in principle but can also be a little confusing, so if you don’t fully understand them, that’s completely OK.

- Artificial Intelligence (AI) – The capability of a system (usually a computer) to make its own decisions based on data and without human intervention (e.g., self-driving car, adaptive learning systems)

- Machine Learning (ML) – The process and the study of how computers improve their own processes through ‘practice’ or experience based on data. (e.g., identifying faces or objects in a photo based on other photos)

- Deep Learning – A set of methods within Machine Learning that use artificial neural networks with many layers to learn representations of data, such as categories, from the data itself. Training can be fully supervised by a human (who provides labelled examples so it can ‘learn’ categories), partially supervised, or unsupervised (the algorithm finds structure in the data on its own and usually makes its own categories). (e.g., looking at every pixel in an image to identify features of that image, such as clusters of colours that form the shape of an eye on a face)

So why does any of this matter for learning? For most educators, not much yet, as we don’t have access to many of these tools, but this is slowly changing. Many learning platforms like LMS/VLEs now have Learning Analytics tools built in. While most of these tools are descriptive in nature (see above), some are predictive or provide a deeper analysis of the data.

For example, if I want to understand what my students are talking about online, I could go beyond simple word counts and use AI and ML to work out the nature of their conversation through semantic analysis. This means I can look at all the words in all the posts in an entire class, or across classes, to understand what students are saying. By training an ML model to identify certain phrases, keywords and other traits, I can start to gain further insights into my students’ learning processes. If my students are talking about learning theory and pedagogy more than technology, for instance, the trained model could analyse new posts automatically and show everyone where the emphasis of the conversation lies. A related technique, sentiment analysis, could even be used to infer the mood of my learners from the words they use.
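Training a real ML model is beyond a short example, but the underlying question (where does the emphasis of the conversation lie?) can be illustrated with a much simpler keyword, or lexicon, approach. In the Python sketch below, the theme names, keyword lists and forum posts are all invented. A trained model would learn these associations from labelled examples instead of relying on a hand-written list, which is what lets it pick up phrasing a human didn’t think to include.

```python
import re
from collections import Counter

# Hypothetical, hand-written keyword lists for each theme of interest.
THEMES = {
    "pedagogy": {"pedagogy", "theory", "constructivism", "scaffolding", "feedback"},
    "technology": {"app", "software", "tool", "platform", "device"},
}

# Hypothetical forum posts.
posts = [
    "I think scaffolding and feedback matter more than any tool.",
    "Which platform did you use? The app kept crashing.",
    "Constructivism is the theory I keep coming back to.",
]

def theme_emphasis(posts, themes=THEMES):
    """Count how many words across all posts match each theme's keyword list."""
    counts = Counter()
    for post in posts:
        words = re.findall(r"[a-z]+", post.lower())
        for theme, keywords in themes.items():
            counts[theme] += sum(word in keywords for word in words)
    return counts

print(theme_emphasis(posts).most_common())  # [('pedagogy', 4), ('technology', 3)]
```

Simple as it is, this approach shows why the choice of keywords (or, for an ML model, the choice of training examples) strongly shapes the ‘insights’ that come out.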

In more direct applications, AI and ML can be used in adaptive and personalised learning applications, using the data collected from learners as they progress through a system to tailor the learning experience directly to them. While this is an exciting prospect, and ties into the concept of ‘Learning Engineering’, some educators are wary of the idea, arguing that it reduces the learner to a rat in a maze or a hamster on a wheel: rather than being exposed to different ideas that may challenge them, learners are only ‘fed’ what they need to meet a very specific end goal, more akin to a machine than a person. What do you think?

Generative AI

Generative AI (Artificial Intelligence) is a class of software tools, usually accessed online, that create content for us, whether text, images or even audio. Many of these tools can also take text, images and video as input and work with them, for example telling us what’s in an image or a video, or summarising text for us.

These technologies are based on machine learning and neural networks: the models respond to natural language prompts we give them (e.g. “Tell me all about how technology can impact learning in a Year 3 maths classroom”) by generating content around those prompts. Since these tools became widely available in 2022 (and even before then), many educators have discussed their pros and cons and how they may impact learning and assessment.
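Large language models are far more complex than anything that fits here, but the core idea of generating text by repeatedly predicting a likely next word can be shown with a toy. The Python sketch below builds a bigram model (counts of which word follows which) from a tiny invented corpus, then ‘generates’ text by always choosing the most frequent next word. This is an analogy only: real generative AI uses neural networks trained on vast datasets, works with sub-word tokens, and usually samples rather than always taking the single most likely word.

```python
from collections import Counter, defaultdict

# A tiny invented training corpus; "." is treated as its own word.
corpus = (
    "students learn by doing . students learn by talking . "
    "teachers learn by listening . students learn together ."
)

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def generate(model, start, max_words=5):
    """Greedily append the most frequent next word until '.' or max_words.
    Ties go to the follower seen first in the corpus."""
    output = [start]
    while len(output) < max_words and output[-1] in model:
        next_word = model[output[-1]].most_common(1)[0][0]
        output.append(next_word)
        if next_word == ".":
            break
    return " ".join(output)

model = train_bigrams(corpus)
print(generate(model, "students"))  # students learn by doing .
print(generate(model, "teachers"))  # teachers learn by doing .
```

Notice that the second output never appears in the corpus (which says teachers learn by listening): the toy produces something fluent and plausible rather than something it was told, a small-scale echo of why generated content needs checking.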

If you’d like to know more about how Generative AI works, check out the video from Google below – this is VERY technical, FYI.

Issues around Generative AI

Many educators are concerned about what assessment that requires students to generate content will look like in the future. As we continue to explore this technology, a more nuanced viewpoint is emerging: these technologies are here to stay and will be incorporated into new digital literacies, so to prepare our students for a world in which genAI exists, we should be teaching them how to use these tools and embedding them where appropriate.

There are, of course, implications for assessment design from educators’ perspective (e.g., if these tools can write essays, students can easily cheat, right?), but they also present many opportunities to enhance learning. Whether through providing feedback, fostering creativity, supporting study strategies, or simply acting as a study tutor in the form of a genAI agent (chatbot), tools like ChatGPT, Bing and Google Gemini are changing teaching and learning as we know it.

For more on Generative AI, check out the resources below.

- Assessment Reform for the age of artificial intelligence (TEQSA)

- Fire, fad, or foe: Will generative AI empower or overpower educators? (Lecture by Danny Liu of USyd – September 2023 – 1hr)

What about Teachers and Learning Designers?

Most of the discussion around genAI in education focuses on learners and students, but it is also very powerful for teachers. It can summarise topics for us, create lesson plans from scratch, or provide feedback on assessment instructions to ensure our students understand what is being asked of them. There are many uses for genAI in our own practice, but also many aspects of its use to consider, including ethical and privacy issues. For example, what does it mean to have genAI provide feedback to our students instead of us? Should we do this, or should we use it as a formative guide for our students and still provide summative feedback based on our own expertise? Who has access to the prompts we give these tools, and do they gain access to our private information if we use publicly available tools or tools provided to us by our employers?

There are obviously many questions yet to answer, as these technologies seem to advance every week, so having a good awareness of their capabilities and the issues surrounding them can be helpful.

NOTE: The following paragraphs about ChatGPT were written by ChatGPT with the prompt “Give me a 2 paragraph summary of What ChatGPT is and what its potential for education might be.”

ChatGPT (one example of GenAI)

ChatGPT is a large language model created by OpenAI that uses deep learning to generate human-like responses to a wide variety of questions and prompts. Trained on massive amounts of text data, ChatGPT is capable of answering factual questions, generating creative writing prompts, providing advice, and engaging in conversation on a broad range of topics. With its ability to understand natural language and generate contextually appropriate responses, ChatGPT has the potential to revolutionize the way we interact with computers and automate a wide range of tasks.

In the field of education, ChatGPT has significant potential as a tool for personalised learning and student engagement. It could be used to answer students’ questions, provide feedback on written work, generate writing prompts or exam questions, and even to create personalised study plans based on individual learning styles and preferences. Additionally, ChatGPT could be used as a language learning tool, providing students with a conversational partner who can respond in real time and adapt to their level of proficiency. As ChatGPT continues to improve and become more sophisticated, its potential to transform the field of education is truly exciting.

The Internet of Things (IoT)

We all know what the internet is. The Internet of THINGS, however, is perhaps a new concept to many, even though it’s everywhere. In a nutshell, the Internet of Things refers to the network connections that many everyday ‘things’ now have, from smartwatches and smart TVs to smart microwave ovens and smart doorbells. Essentially, almost any technology you see with the word ‘smart’ attached these days is part of the IoT, and these devices are usually linked to a central software hub that you can control with traditional clicks and taps, with your voice, or even with your body movements. This concept is particularly notable in educational settings because of the ‘tinkering’ side of IoT.

Many schools now offer children and adults the opportunity to explore single-board computers and microcontroller boards such as the Raspberry Pi, Arduino, micro:bit, Tinker Board and others. These devices are quite easy to connect to a network and to interface with many kinds of consumer and custom hardware. Years ago, when hobbyists were building their own automatic curtain openers, or switches to turn lights off remotely, they probably never imagined that such devices would become mainstream, offered by IKEA and other large home furnishing companies.
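The logic students write in these tinkering projects is often short. The Python sketch below simulates the automatic curtain opener mentioned above: each light-sensor reading decides whether the curtains should be open or closed. It is a hypothetical, hardware-free simulation; on a real board the readings would come from that board’s own sensor library and the state changes would drive a motor, and the 0–255 light scale and both thresholds are assumptions. Using two thresholds (hysteresis) stops the curtains flapping open and shut when the light level hovers around a single cut-off.

```python
OPEN_ABOVE = 150   # hypothetical light level above which the curtains open
CLOSE_BELOW = 100  # hypothetical light level below which the curtains close

def curtain_controller(readings, start_open=False):
    """Return the curtain state ('open'/'closed') after each light reading.

    Readings between CLOSE_BELOW and OPEN_ABOVE leave the curtains as they
    are, so small fluctuations in light don't cause rapid toggling.
    """
    is_open = start_open
    states = []
    for level in readings:
        if not is_open and level > OPEN_ABOVE:
            is_open = True   # on a real device: drive the motor to open
        elif is_open and level < CLOSE_BELOW:
            is_open = False  # on a real device: drive the motor to close
        states.append("open" if is_open else "closed")
    return states

# Simulated readings over a day (0 = dark, 255 = bright).
print(curtain_controller([20, 90, 160, 130, 110, 95, 40]))
# ['closed', 'closed', 'open', 'open', 'open', 'closed', 'closed']
```

Working out why the second threshold is needed, usually after watching a prototype misbehave, is exactly the kind of problem-solving these projects are good at prompting.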

When it comes to their application in learning, most of the time it’s in the form of problem-based, scenario-based or inquiry projects, where students set out to explore a problem and then solve it in ways that are up to them. In the future, we are likely to see more physical devices that support learning, and less exclusive reliance on screen-based devices to access the web, leading to new ways to create, explore, collaborate and innovate.

Adaptive Learning

This refers to educational systems that adapt to learners as they progress through them, using data gathered from the learners’ interactions to shape the next step of each learner’s experience to suit their individual needs. This has been around for many years in the form of self-paced learning experiences that ‘branch’, or redirect learners to different learning materials to support them if they don’t fully understand a topic. This usually requires input from the learner, either as answers to quiz questions and surveys or as the selection of a topic of their own choice.

Common tools for creating adaptive learning experiences include an LMS (using something called ‘selective release criteria’) and software tools like Articulate Storyline 360 or Adobe Captivate. Both Storyline and Captivate allow for the creation of branched scenarios in the presentation of information, and some tools even allow for interactive software training, using ‘hotspots’ on the screen to detect where a learner has clicked and thereby simulate interactions on a computer.
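Whether it is configured through LMS release conditions or built in an authoring tool, the branching logic behind these experiences comes down to rules that map a learner’s responses to their next step. The Python sketch below shows one hypothetical rule set; the activity names, quiz cut-offs and self-selected topic option are invented for illustration and don’t reflect how any particular LMS or authoring tool stores its rules.

```python
def next_step(quiz_score, chosen_topic=None):
    """Choose a learner's next activity from their quiz score (0-100).

    Hypothetical rules: low scores branch to remediation, middling scores
    to extra practice, and high scores release either a topic the learner
    chose themselves or the next module.
    """
    if quiz_score < 50:
        return "revisit: core concepts video"
    if quiz_score < 75:
        return "practice: worked examples set"
    if chosen_topic is not None:
        return f"extension: {chosen_topic}"
    return "release: module 2"

for score, topic in [(40, None), (68, None), (90, None), (90, "case studies")]:
    print(score, "->", next_step(score, topic))
```

AI-driven tools replace these hand-written cut-offs with decisions learned from data such as time on task, but the shape of the decision (responses in, next step out) stays the same.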

There are many tools out there now that do this, and some are even leveraging AI and ML to create even more customised experiences by analysing time on task, responses to text based questions and other data to direct students where they need to go.

These tools are used in educational settings, but are much more common in private sector corporate training settings, as they allow students to work through them usually in one sitting, as opposed to being part of a continuous learning experience like a class or module of learning.

Collaborative Creation and Annotation

Though they have been around for a long time, collaborative tools like Google Docs and, more recently, Microsoft Word in Microsoft 365 allow us to collaborate in real time to create documents, charts and other artefacts as if we were sitting in the same room, looking at the same computer monitor. Combined with reliable video chat technologies, these tools let students collaborate like never before, allowing them to provide feedback, work out ideas and co-create.

Building upon the Web 2.0 technologies that allowed us to create and democratise knowledge in new ways, we now seem to have opened the floodgates in terms of information exchange, and there may be too much information out there. A whole group of technologies has popped up to help us organise and curate the information we find online.

For the purposes of teaching and learning, collaborative annotation of a resource is a great way to take notes, and have a conversation about the topic literally on top of the resource itself. This book and this site use a platform called Hypothes.is to allow for collaborative annotation to take place and there are even a few tools that allow for the collaborative annotation of video, audio and PDF files, such as CLAS, the Collaborative Learning Annotation System.

Immersive Learning

Immersive learning refers to any technology that allows for an immersive experience. This could mean any one of the following technologies:

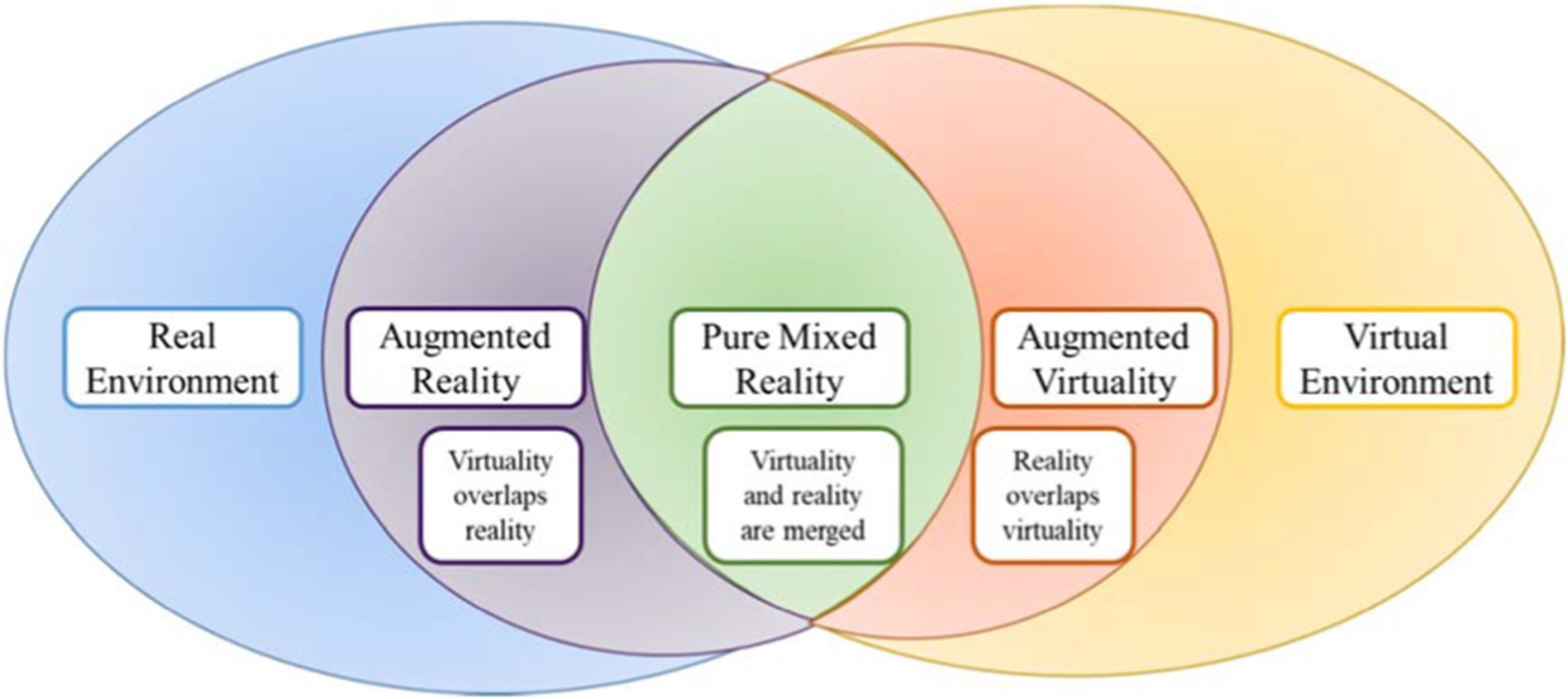

- 360 Video – This is a very basic immersive technology that uses 360 cameras to capture images and video, allowing users to experience an environment from all angles, not just where a traditional camera is pointing.

- Virtual Reality (VR) – Immersion in a virtual environment by wearing a Head Mounted Display (HMD) and using controllers or hands to interact with objects in that environment

- Augmented Reality (AR) – The use of smartphones, tablets or smartglasses to see digital objects and information overlaid on top of the real world around us

- Mixed Reality (MR) – A mix of VR and AR: an HMD through which you see the real world with digital objects overlaid on it

If you’re confused by all of these terms, Flavián et al. (2019) provide a good diagram of how they overlap.

A common acronym used to describe these technologies as a group is XR (Extended Reality). The term overlaps VR, AR and MR, so you can think of the ‘X’ as standing in for the V, A or M where appropriate.

360 Video is probably the most accessible XR technology: all it requires to create is a special camera, and almost any device can be used to view it. 360 video allows for multiple means of engagement: you can use an HMD (Head Mounted Display) like a VR headset, hold your phone up and move it around yourself, or simply drag your finger or mouse around the scene. Below are a couple of examples of how this can be used for educational purposes.

The primary advantage of these technologies in learning is the ability to physically interact with learning materials beyond touching a 2D screen, and to augment what the learner sees in the real world with information that contextualises it.

There are many applications for these sorts of technologies, including using VR in behaviourist-style training scenarios (e.g., operating machinery or practising medical procedures), using AR to learn about different physical objects or geological features, or using MR to design something in a physical space.

While there has been a lot of research into VR and MR applications in education, there are some barriers that make their use challenging. The first is cost: although these devices have come down in price in the last couple of years, to as low as around US$300 when they used to cost thousands, a headset is still an additional purchase. By contrast, because many people of all ages now have smartphones, AR is much more prevalent thanks to the ubiquity of the devices it can run on.

Another factor is simply the effect on our bodies. In a line of research on ‘Cybersickness’, Palmisano (2017) explores how the sense of motion in VR is disrupted because what our eyes see doesn’t align with the motion-detecting mechanisms in our inner ears and certain parts of the brain, leading to motion sickness. As a result, VR users sometimes suffer vertigo, dizziness and other symptoms. This is another reason why some researchers and educators favour AR technologies: there is less of a health risk.

Additionally, there have been a few studies on ‘false memories’ resulting from VR experiences. False memories refer to the perception that an event has occurred in your life even though it hasn’t. This phenomenon occurs at all ages, but particularly in children, and can be the result of mere suggestion based on the shared memories of those around us. For example, if a friend said you went for brunch two years ago and you don’t remember, you may shift your memory of certain events to accept that you did go, even though you didn’t. Edited photos can similarly change your memory of an event.

In a 2009 study, Segovia & Bailenson explored this phenomenon with VR. First, children were told that they had experienced certain events, such as playing with a mouse or swimming with orcas, because their parents remembered it. Then some children were shown virtual reality ‘movies’ of themselves engaging in these events from a third-person perspective. These children were more likely to think the event had actually occurred than children who saw photos or had no ‘fake evidence’ that the event had occurred.

While VR does present an exciting step forward in immersion and multimedia learning, we as educators must always be mindful of any new technology that comes forth and the effects it may have on learning, much like generations before us began to worry about the dreaded television and internet.

With AR technologies, these issues are much less pronounced because we remain grounded in our own surroundings, and our brains perceive the experience differently.

Another potential issue with VR is the ethics of exposing people to potentially harmful situations. Some researchers and companies are exploring how VR users can be trained to be more empathetic, based on the illusion that our virtual bodies are our own (Bertrand et al., 2018). Some private training companies have extended this idea to diversity training, having employees experience situations such as racially motivated micro-aggressions and other harmful events in VR. VR has also been used for exposure therapy: if you’re afraid of flying, for example, spending time in a virtual plane can be one way to work through that fear.

In the video below, this author outlines some implications for research and practice based on educational research in Cognitive Load Theory.

While the technology is exciting, researchers in academia and in the private sector are still learning what works and what doesn’t in terms of these types of interactions, and the technology is changing rapidly.

Among the many advancements in this technology, hand tracking has added a new dimension to interactions within VR environments. Until a couple of years ago, the only way to interact with objects in 3D virtual environments was to use controllers tracked either by the headset or by external devices connected to the same computer. With the release of Facebook’s self-contained Oculus Quest HMD, hand tracking is now built into the software, giving researchers, designers and educators a new avenue to explore.

Virtual 3D Objects

Another type of immersive experience, while not as new, is the ability to interact with 3D objects. This can be done using a computer, a smartphone or VR, with varying impacts on learning for each. As many artists and designers share virtual objects, it is easy to use them in learning materials and as part of a learning experience. The example below is taken from Sketchfab, an online platform where artists and designers can share, and in some cases sell, their 3D models for others to use in their own work.

This technology, together with a related technology called photogrammetry, which turns a series of still photos into a 3D model, allows exciting materials to be created in academic fields like archaeology. The example below uses a platform called Pedestal3D, an education-focused repository of 3D objects. The University of New England (Australia) repository houses a number of interesting artefacts that learners can interact with, which is much more immersive than simply looking at a picture.

If you want to learn more about photogrammetry and an alternative 3D scanning method, light-based scanning, which affords faster and higher-fidelity scans, check out an overview from UNE’s Digital Education Unit.

The Metaverse

The next step that big tech is looking to get into is the ‘metaverse’ – essentially a connected virtual world using Virtual Reality technologies – Facebook is so dedicated to this idea that the company was renamed to Meta in late 2021. In the video below you can see an overview of what this might look like using a platform called Spatial.io

Bringing it all together

In schools, many students are learning with tools that bring together elements of AR, programming and IoT. These tools help to build problem-solving and critical thinking skills while also allowing students to express their creativity through this integration.

Some educational tools to explore include CoSpaces, Tynker, and Kai’s Clan.

MicroCredentials

Microcredentials are often referred to as badges, and while online badges have been around for years, they are still an emerging technology because of the major role they play as educational organisations seek to reframe what learning and credentials mean, and how to incentivise engagement with learning materials and assessments in mini-courses they may wish to offer or embed in existing classes.

Linked to gamification (see the previous chapter), digital badges allow a reward-based system to be implemented within technology-enabled and online learning environments. Beyond simple digital badges, many badges are now ‘open badges’ (see Badgr), meaning that a badge can be issued by any organisation and then stored and displayed on other systems as well (e.g., your online CV, professional networking sites, etc.).
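What makes a badge ‘open’ is that it is published as a structured, verifiable record that other systems can read. The Python sketch below builds such a badge award record (an ‘assertion’) as JSON, loosely modelled on the Open Badges 2.0 specification: a hashed recipient identity, a link to the badge definition, an issue date and a verification method. The field names reflect that version of the specification as best we understand it and should be checked against the current version (it has since been revised) before use; all URLs, the email address and the salt are hypothetical placeholders. Platforms like Badgr generate these records for you.

```python
import hashlib
import json

def hashed_identity(email, salt):
    """Hash the recipient's email with a salt so it isn't published in plain text."""
    digest = hashlib.sha256((email + salt).encode("utf-8")).hexdigest()
    return "sha256$" + digest

def badge_assertion(assertion_url, badge_url, email, salt, issued_on):
    """Build a badge award record loosely modelled on Open Badges 2.0 (unverified sketch)."""
    return {
        "@context": "https://w3id.org/openbadges/v2",
        "type": "Assertion",
        "id": assertion_url,
        "recipient": {
            "type": "email",
            "hashed": True,
            "salt": salt,
            "identity": hashed_identity(email, salt),
        },
        "badge": badge_url,                   # link to the badge definition (name, criteria, issuer)
        "verification": {"type": "hosted"},   # record is verified by fetching it from its URL
        "issuedOn": issued_on,                # ISO 8601 date-time string
    }

record = badge_assertion(
    "https://example.org/assertions/123",         # hypothetical URLs and learner
    "https://example.org/badges/data-literacy",
    "learner@example.com",
    "s4lt",
    "2024-03-01T00:00:00Z",
)
print(json.dumps(record, indent=2))
```

Because the record is plain JSON with a stable structure, a CV site or professional network can display and check the badge without needing an account on the issuer’s system, which is the portability described above.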

Badges provide a visible digital recognition for the completion of specific classes, programs or courses and can provide an easy demonstration of the acquisition of new skills that the learner can display wherever they like.

Finally, the NGDLE

In 2015, EDUCAUSE, a prominent non-profit organisation that publishes the yearly Horizon Report, put out another report focusing on the NGDLE, a really fun-to-say acronym that stands for Next Generation Digital Learning Environment.

Read the original report here OR read a nice web-updated reflection on it here.

In a nutshell, this project looked at existing learning platforms like the LMS / VLE (yes, we still use those terms), critiqued them, and considered what a next-generation platform should look like. This new way of delivering digital learning includes many technologies that are currently ad-hoc add-ons to existing platforms. Integrating all these pieces is slowly happening, but no platform has yet done it successfully. With the advent of standards that allow more interoperability, like the APIs (Application Programming Interfaces) used in modern web apps and the LTI (Learning Tools Interoperability) standard that allows web apps to integrate easily into existing platforms, we are on our way, but it will take time for organisations and institutions to adapt and get to where this grand vision wants us to be.

Evolved ‘Classic Technologies’

There are many tools and technologies that have been around for years and are still here. While we can’t list every single tool in this chapter, some technologies have evolved and kept up with the times, or found new relevance in our always-connected world.

Presentations

While PowerPoint has been around for a long time, technologies that let people talk about what they know have evolved as well. In the video below, Greg Dorrian, Manager of Learning Media at the University of New England, Australia, demonstrates a Lightboard, a technology that allows instructors to record presentations while drawing, diagramming or annotating slides, all without turning away from the audience.

Response Systems

Classroom Response systems, or CRSs have been around for years. In the past they were called ‘clickers’, physical remote control devices that students would bring to class so they could respond to simple prompts in a lecture theatre with the results shown in a presentation (e.g., ‘Do you understand this concept?’, ‘What do you think? – True or False’).

Over the years, these systems have moved to online platforms such as PollEverywhere, Mentimeter and Kahoot, and have expanded to include mini-games and other audience participation tools. Many of these tools require participants to respond using a smartphone, which not everyone has, and other options, such as Slido, have since appeared that allow these mini-polls and mini-games to be embedded in presentations.

These systems are still valuable in a physical space without slides, as a way to collect ideas or vote on a topic. A tool called Plickers (a play on 'clickers') is especially useful here: students or participants respond by holding up printed paper cards bearing scannable codes, similar to QR (quick response) codes, and the teacher uses their own device to 'scan the room' and collect the responses.

Mindmapping

Another set of tools that has been around for a long time is mind-mapping tools (for more examples of tools, check out this page). These tools allow students and teachers alike to express ideas through webs of related information, and to create and design those webs however they like. The example below, made with Miro, maps how assignments in a series of classes may be used and built upon in other classes.

Adopting Emerging Learning Technologies

As with any technology, there is always going to be a learning curve, both in how we use it and in how our students use it. As with selecting any learning technology, we need to be mindful that we may not have the time to learn how to use it, or the expertise to implement it. If students start using a tool and we are unable to support their use of it, it is unlikely to be a good learning experience for them.

“I never use a technology that’s smarter than me”

-a smart anonymous teacher

While some of the technologies in this chapter require a large investment of time and energy to learn, others are available in 'canned' versions that we can use with little overhead, such as templates and starter kits, or even existing educational apps on the hardware we already have, to create interesting learning experiences.

Additionally, pairing these technologies with emerging pedagogical practices can enhance learning, as the technology and the pedagogy depend on each other.

The amazing disappearing learning technology

When we use innovative and emerging technologies, or even established ones that rely on third-party software, we often have little control over the tool, so its persistence and reliability are largely out of our hands. After adopting a newer piece of software or technology, we may show up to work one day to find that the company behind it has gone bankrupt, has been bought and absorbed by a larger company, or has switched from a free to a paid model. Learning technology companies usually give their users fair warning of such changes so they can plan accordingly, but these changes happen more often than we would like (e.g., Google Tour Creator). As a result, when assessing a learning technology for adoption, it's important to consider its likely longevity as well as its affordances and feature set.

Key Take-Aways

- Learning Analytics allows us to improve teaching and learning through the use of educational data, but we must be mindful of the judgements we make as educators based on the information we have access to.

- Artificial Intelligence is something to be mindful of. It has yet to reach the 'mainstream', but it is expected to do so in the next few years, so understanding what it is and how it works is valuable.

- VR and AR are definitely here and present numerous opportunities and challenges to their use in education.

- ‘Legacy’ technologies like badges and clickers have been re-purposed for an always-connected world.

Note that when considering the use of these technologies, it's always good to revisit their affordances, their functionality and why they exist.

Revisit Guiding Questions

Consider the title of this chapter and answer it for yourself, or even try to sum it up in bullet points. “Current trends in learning technology include…”

- What did you learn about that you didn’t know before?

- How likely is it that you’ll be working with these technologies in your workplace or learning environment?

- Which technology are you most interested in using? How realistic will it be for you to implement it, given the level of technical knowledge it requires?

References

Corrin, L. (2021). Mind the Pedagogical 'Gap' when preparing staff to use learning analytics. NEXUS. Retrieved from https://www.solaresearch.org/2021/06/mind-the-pedagogical-gap-when-preparing-staff-to-use-learning-analytics/ on August 10, 2021.

Flavián, C., Ibáñez-Sánchez, S., & Orús, C. (2019). The impact of virtual, augmented and mixed reality technologies on the customer experience. Journal of Business Research, 100, 547-560.

Gibson, D., Ostashewski, N., Flintoff, K., Grant, S., & Knight, E. (2015). Digital badges in education. Education and Information Technologies, 20(2), 403-410.

Lang, C., Siemens, G., Wise, A., & Gasevic, D. (Eds.). (2017). Handbook of learning analytics. New York, NY, USA: SOLAR, Society for Learning Analytics and Research. (Open Textbook)

Lockyer, L., Heathcote, E., & Dawson, S. (2013). Informing Pedagogical Action: Aligning Learning Analytics With Learning Design. American Behavioral Scientist, 57(10), 1439–1459. https://doi.org/10.1177/0002764213479367

Palmisano, S., Mursic, R., & Kim, J. (2017). Vection and cybersickness generated by head-and-display motion in the Oculus Rift. Displays, 46, 1-8.

Further Reading

Aldowah, H., Rehman, S. U., Ghazal, S., & Umar, I. N. (2017, September). Internet of Things in higher education: a study on future learning. In Journal of Physics: Conference Series (Vol. 892, No. 1, p. 012017). IOP Publishing.

Bertrand, P., Guegan, J., Robieux, L., McCall, C. A., & Zenasni, F. (2018). Learning empathy through virtual reality: multiple strategies for training empathy-related abilities using body ownership illusions in embodied virtual reality. Frontiers in Robotics and AI, 5, 26.

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in Learning and Education. EDUCAUSE Review, 46(5).

Segovia, K. Y., & Bailenson, J. N. (2009). Virtually true: Children’s acquisition of false memories in virtual reality. Media Psychology, 12(4), 371-393.

Watters, A. (2021). Teaching machines: The history of personalized learning. MIT Press.
